A tad more on the same tangent:

Perhaps you can make and play back a recording pushed into the red without clipping, but if you play 8 recordings that have hit the red, it will clip? So the meters would be telling us: hey, if you keep this up with the rest of the tracks, you’ll be clipping and will have to turn down the output stages?

Other tests with a 0 dB sine wave on 8 tracks. Track Level and Master Level limits to avoid clipping:

- Track Level 90 with Master Level at 0
- Track Level 108 with Master Level at -11
- Track Level 127 with Master Level at -19

Fascinating… could we perhaps calculate the overall headroom from these parameters? If you can compensate for the track levels with the master fader, it would suggest a hard limit on the overall mix rather than a track-by-track one?
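One part of that question is plain arithmetic. Identical, in-phase signals add sample by sample, so eight copies of the same 0 dB sine peak at eight times the amplitude of one, about 18 dB higher. How the OT’s Track Level and Master Level values map to dB is not established in these tests, so this only sizes the problem; it doesn’t decode the 90/108/127 and 0/-11/-19 readings. A minimal Python sketch, assuming the tracks sum linearly with no limiting:

```python
import math

def coherent_sum_gain_db(num_tracks: int) -> float:
    """Peak increase (dB) when num_tracks identical, in-phase signals are summed.

    Identical waveforms add sample by sample, so the summed peak is
    num_tracks times the single-track peak, i.e. 20*log10(num_tracks) dB.
    """
    return 20.0 * math.log10(num_tracks)

for n in (1, 2, 4, 8):
    print(f"{n} tracks: +{coherent_sum_gain_db(n):.2f} dB")
```

Real program material rarely lines up peak for peak like a test sine does, so this is the worst case rather than the typical one.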

Another test with that 0 dB sine:

Send Cue Out to Input AB with 2 cables.

Red light with

Cue = 127, Master Cue = 16, Gain = 0

Checked the input with the Noise Gate:

Red light is between -12.3 dB and -11.2 dB

Red light just before clipping with

Cue = 127, Master Cue = 63, Gain = 3

(between -3.3 dB and -2.2 dB)

Recorded. No clipping. The sine is near 0 dB.

I feel like we’re getting closer to something…

If we could actually come up with a chart and equations where you could take your 8 tracks’ sample dB levels, apply the attenuation equations for each parameter, and calculate how much of the available headroom is used, we should win the Octatrack Nobel Gain Prize…

Thanks sezare, those are important results confirming that master level can compensate for track clipping, and that track level and master level have a combined effect on headroom, but not with the same value scaling…

@Open_Mike Check the results above. I could increase Master Cue (and Gain) quite a lot before clipping.

Red light: between -12.3 dB and -11.2 dB

Clipping: between -3.3 dB and -2.2 dB

I don’t know if the noise gate dB values are correct.

If I set my sine recording’s gain to 12.5 dB instead of 12 dB in Attributes, it clips. So I think I’m very close to 0 dB.

To clarify, so I can interpret your results:

Is your original 0 dB sine wave sample set to 0 dB gain in the attributes?

And it’s the recording of that sine that is set to +12 dB?

If so, I think it would be interesting to compare sine waves with the +12 dB setting, even if we have to drop the original level, so as to better compare the parameter values with those used for a recorded sample…

Yep, yep. Original 0 dB. Recorded +12 dB.

The waveforms are almost the same (0 dB), but the recording is 12 dB louder because of the Attributes gain.

I’m uploading my original sample. I’ve found it useful for level tests and click tests (it loops perfectly at 120 bpm), and it’s less annoying than a 1000 Hz sine! Right-click to download on Windows.

SINE_256hz_2s_0db

SINE recorded, peak at -0.09 dB

Ok, I just did some tests on my MKI and the results were not what I expected, and I think they illustrate some interesting things.

For a test signal, I used Reaper’s built-in tone generator, fed through an oscilloscope plugin and then out a pair of channels on a Black Lion Audio-modded Digi002.

To calibrate, I fed the outputs back into a pair of line inputs (operating at +4 dBu) and made sure that the direct loop was as close to unity as I could get it. In the end, I settled on the test tone at -2 dBFS (the hottest I could get it without clipping the inputs) and added 0.399 dB of makeup gain on the input to correct for a bit of signal loss (basically set by eye, so that the oscilloscope on the test tone channel pre-output visually matched the oscilloscope on the input).

After that, I patched the test tone output to inputs A and B on my Octatrack, and the main outputs back into Reaper. I started a new project, set it up to record A and B into record buffer 1 and simultaneously play back record buffer 1 through the main outs. I turned timestretch off, but otherwise started with the OT defaults.

With a -2 dBFS signal on the inputs, the output was hard-clipped at just above -12 dB:

In the OT audio editor, the recording was digitally clipping at 0 dBFS:

Next, I turned the mixer level for in AB to -32 to see if that kept the signal from clipping (which would mean that the level controls in the mixer were actually adjusting the input gain in the analog domain, pre ADC). I didn’t expect to see any change in the clipping, and there wasn’t any, which means that boosting and cutting the input level in the mixer is happening in the digital domain, between the ADC and the record buffer. The signal level had been lowered digitally, but the clipping was identical:

Next, I returned the mixer settings to their defaults and reduced the level of the tone generator until the output from the OT was as close to clipping as it could get without actually clipping. I ended up with the test tone set to -8 dBFS. With the OT’s gain staging at default settings, the output was approximately 4 dB lower than the input:

The input LEDs were still pinned in the red, but the audio in the record buffer wasn’t clipped:

Next, I moved on to the AMP page, and this is where I got my most surprising result. With the test tone still at -8 dBFS and the mixer level for the inputs at 0, increasing the level on the AMP page to +1 or +2 started to clip the signal right away. By the time it was up to +12, the output was clipped heavily and was still peaking at just over -12 dB:

As far as I can deduce from this, the OT’s signal path is not floating point, which means it’s possible to clip it internally! I have no idea (and no clue how it would be possible to test) what the internal signal path is like, whether it’s all fixed point or whether individual processes are done in floating point and then dithered back to 24 bit, but I think it’s pretty safe to say that at least some parts of the internal signal path are fixed point and can be clipped. The takeaway here is that increasing the volume on the amp page (or, presumably, with effects) can digitally clip - it’s not like a modern DAW where everything is floating point and you have essentially unlimited internal headroom. This is a big deal in terms of how you approach gainstaging.
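To make the fixed-point versus floating-point distinction concrete, here is a minimal Python sketch of a generic saturating 24-bit integer gain stage next to the same gains done in floating point. It is a toy model under stated assumptions (hard clipping at full scale, round-to-nearest quantisation), not the OT’s actual DSP, which isn’t documented; `to_fixed_24` and `fixed_gain` are hypothetical helper names:

```python
FULL_SCALE = 2 ** 23  # 24-bit signed: codes run from -2**23 to 2**23 - 1

def to_fixed_24(x: float) -> int:
    """Quantise a float (1.0 = full scale) to a 24-bit code, hard-clipping at the limits."""
    code = round(x * FULL_SCALE)
    return max(-FULL_SCALE, min(FULL_SCALE - 1, code))

def fixed_gain(code: int, gain: float) -> int:
    """Apply a linear gain to a 24-bit code and store the result back in 24 bits."""
    return to_fixed_24(code / FULL_SCALE * gain)

peak = 0.9             # a sample peaking just below full scale (about -0.9 dBFS)
boost, cut = 2.0, 0.5  # roughly +6 dB, then roughly -6 dB

# Fixed point: the boost saturates at full scale, so the cut can't restore the original peak.
fixed_result = fixed_gain(fixed_gain(to_fixed_24(peak), boost), cut) / FULL_SCALE
# Floating point: the intermediate 1.8 (over full scale) is kept, so the cut restores 0.9.
float_result = peak * boost * cut

print(f"fixed: {fixed_result:.4f}  float: {float_result:.4f}")
```

In the fixed-point chain the boost clips the 0.9 peak to full scale, so the later cut only brings it down to about 0.5, with the waveform already flattened; in floating point the cut simply undoes the boost. That matches what the AMP test suggests: once a fixed-point stage clips, nothing downstream can repair it.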

Next, to see if the track level control could also clip the signal path, I returned all of the track parameters to their defaults again and then turned up the track level. What I ended up with was just shy of unity gain when the track level was all the way up at 127, which suggests that track level is cut-only. However, because the record buffer’s Gain attribute was set to the default of +12 for all tests, track level at 127 presumably corresponds to 12 dB below unity gain.

Default OT settings with test tone at -8 dBFS and playback track level at 127:

Next, I went to the settings menu and adjusted the gate threshold to the highest setting that would open on both channels with the tone generator still at -8 dBFS. The highest I could get was -4.5, although input A would still pass with it set at -3.3 (so either my interface’s outputs aren’t matched as well as I’d like or the OT’s inputs aren’t). At any rate, the gate settings are pretty coarse, but in this test a gate threshold of -4.5 roughly corresponded to an input level of -8 dBFS, which more or less corresponds to just below clipping the input. Obviously this is pretty far from lab conditions, but since you’d never want to drive the inputs hot enough to reach 0 dBFS anyway, it’s still accurate enough to be instructive, if only because it shows that the gate threshold doesn’t necessarily correspond to a decibel value (or if it does, it’s calibrated quite differently from the line outs on my interface).

Next, I adjusted the tone generator’s level and watched the input LEDs to find out where the transition from orange to red happens. The first thing I noticed is that there are 5 distinct colors: green, yellow, light orange, dark orange, and red. If you gradually increase the input level, you can easily see where the LED flips over from one color to the next; it’s not a gradual transition. The other thing I noticed is that the threshold between one color and the next is pretty inconsistent. I did a bunch of slow level changes and found that if you start in the red and decrease the level, the transition from red to orange happens much lower than if you start low and increase the level until you go into the red. In my tests, if you start low and push the input level up at the source, it hits the red somewhere between roughly -16 dB and -13 dB, but if you start at full and lower the input level, it goes from red to orange somewhere between roughly -18 dB and -22 dB! Suffice it to say, the LEDs are a rough guide at best, and in either direction the level where they were pinned in the red was at least 4-5 dB below clipping.
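The direction-dependent switching looks like hysteresis: an indicator that turns on at one level and only turns off at a lower one. It could equally be peak-hold or release ballistics in the metering, and these tests can’t tell the two apart. A minimal Python sketch of the hysteresis reading, with thresholds picked from the ranges above purely for illustration (`HysteresisLed` is a hypothetical name, not an OT firmware detail):

```python
class HysteresisLed:
    """Hypothetical red-LED model with separate on and off thresholds.

    Goes red when the level reaches on_db, and only leaves red once the
    level falls below off_db. Defaults are illustrative picks from the
    ranges reported above, not values from the OT firmware.
    """

    def __init__(self, on_db: float = -14.0, off_db: float = -20.0):
        if off_db >= on_db:
            raise ValueError("off_db must be below on_db")
        self.on_db = on_db
        self.off_db = off_db
        self.red = False

    def update(self, level_db: float) -> bool:
        """Feed one level reading and return whether the LED shows red."""
        if not self.red and level_db >= self.on_db:
            self.red = True
        elif self.red and level_db < self.off_db:
            self.red = False
        return self.red

led = HysteresisLed()
rising = [led.update(db) for db in (-24, -18, -15, -14, -12)]
falling = [led.update(db) for db in (-12, -16, -19, -20, -21)]
print(rising)   # red from -14 upward
print(falling)  # stays red down to -20, drops out at -21
```

The practical consequence is the same either way: the level at which the LED changes color depends on where you came from, so a single LED reading says little about the actual peak.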

Just to see, I set the test tone to -20 dB and adjusted the OT’s gate as before, to the highest threshold that still passed both channels. What I got was a threshold of 15.7. From this and the previous gate experiment, I’m speculating that the gate numbers are approximately the input level plus 4. Since my interface is (nominally, at least) operating at a +4 dBu line level, perhaps the OT’s inputs are calibrated to a nonstandard +0 dB line level, which would be a reasonable way for Elektron to ensure that they work acceptably with both +4 and -10 equipment.

Finally, I returned the OT to its default gain staging once more, returned my test tone to -8 dBFS (which according to my tests corresponded to just below 0 dBFS in the record buffer), and increased the master level in the OT mixer until I achieved unity gain, which happened at +12.

So, that’s a whole lot of information that may or may not be useful, but my main takeaways are:
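The offset estimate behind that speculation is simple enough to write down. A minimal Python sketch, reading the reported 15.7 as -15.7 (an assumption, since a positive threshold wouldn’t fit the pattern of the first measurement). With only two points from one interface, this calibrates this particular setup, not the OT; `predicted_gate_threshold` is a hypothetical helper:

```python
# (test tone level in the DAW in dBFS, highest gate threshold that still opened)
# Assumption: the second threshold is -15.7, not +15.7.
measurements = [(-8.0, -4.5), (-20.0, -15.7)]

# Offset between gate threshold and tone level for each measurement.
offsets = [round(gate - tone, 2) for tone, gate in measurements]
mean_offset = sum(offsets) / len(offsets)

def predicted_gate_threshold(tone_dbfs: float) -> float:
    """Rough gate threshold expected for a given DAW tone level (this setup only)."""
    return tone_dbfs + mean_offset

print(offsets, round(mean_offset, 2), round(predicted_gate_threshold(-12.0), 1))
```

The two offsets (3.5 and 4.3) differ by almost a dB, which is about what you’d expect from a coarse gate control, so "roughly +4" is as far as the data goes.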

- A -8 dBFS signal at +4 dBu line level corresponds to 0 dBFS (without clipping) at the OT’s ADC. Where the OT’s -12 dB pad falls in this equation I don’t know.

- A gate threshold of -4.5 corresponds to just about the hottest signal that you can safely record into the OT without clipping.

- It is possible to digitally clip the OT’s internal signal path using the AMP level, so AMP level should be used for cutting more than boosting, and it is the first thing to check if a signal is clipping while the inputs still have headroom to spare.

- From the OT default settings, increasing a track’s level to 127 should result in unity gain.

- From the default OT settings, increasing the master to +12 should also result in unity gain.

- Track level appears to be cut-only. I was unable to clip a signal that was hitting 0 dBFS in the track recorder by increasing the track level.

- Don’t pay much attention to the LEDs and definitely don’t worry about keeping them in the green! Yellow to light orange is probably the sweet spot, but even in the red there’s headroom to spare.

Another test I didn’t think of would be to set up something similar (a test tone on the OT inputs that was peaking at or just below gate threshold -4.5, recorded into a track buffer and played back from a flex machine), but put an analog pad of some kind (basically a resistor or pot, something that couldn’t distort) between the OT outputs and the audio interface inputs so that you could turn the OT mixer’s main level up all the way and see if it was possible to clip the OT’s DAC by running the mixer too hot. I assume it would clip but you never know.

Anyway, if anyone is up to try to replicate some of this on their setup I think it would be really interesting to see if the results were the same (and it would help eliminate the variables introduced by my interface maybe not being that accurately calibrated). Likewise, if anyone notices any glaring mistakes please point them out! Also, in some of the images the title on the return signal oscilloscope window might not match the test conditions I described for that image, but that’s because I forgot to rename it for a couple of them. Likewise the filenames don’t always match the test conditions exactly, but were close enough for me to keep track of what was what while I wrote this post.

Hope this helps! It definitely made me rethink my approach to OT gainstaging a lot.

Best thread on this forum in months! I struggle with understanding/optimizing the gain structure of the OT all the time. It would be amazing if Elektron could task someone with writing a thorough explanation and some suggestions (shouldn’t take more than a couple of hours, right?) …so that we wouldn’t need to fumble around with guesswork, oscilloscopes, and tone generators (and hours of your guys’ time) and just get the straight dope on this persistent mystery…

Oh, looking back at it now, I realize there’s a good chance that when I thought the audio in the record buffer was hitting 0 dBFS, it was actually hitting -12 dB because of the internal pad, and at the zoom level I was using in those photos the audio editor display only shows a range up to -12 dB (can anyone who knows this thing better confirm or deny? I won’t have a chance to do any more tests for about a week and a half, since I’ll be away for the holiday and then working a couple of extra shifts when I get back). That would make a lot more sense: with the 12 dB pad happening between the inputs and the buffer, there would be no way for a recording to actually hit 0 dB in the buffer at all, and it wouldn’t make any sense for the padding to happen post-buffer. So that’s definitely a mistake in my thinking up there, but I don’t think it invalidates everything else.

There’s a lot to digest after you set up this detailed examination (and much of it is clarified throughout, mostly); however, for the sake of clarity in terminology and to permit meaningful testing by others: there’s no fixed relative (dB) relationship between the outputs of device X and the inputs of the OT.

Yeah, this makes sense, but it’s good to flag and solidify the idea about how best to think about the inputs - aiming for as decent a level (where possible) at source will help

Hm, not quite clear on what this might mean either, it’s evidently not gain in the way that the Mixer gain is

you’re observing/equating here, of course, because there’s no relation between dBFS (digital) and the corresponding device-specific level from your interface appearing at the analog input of the OT, so folks need to make their own numerical observations here (or quote dBu/V at the input)

cool, good to know this

Pro gear sometimes references the nominal +4 dBu level [3.5 V p-p] and may be switchable to the consumer level of -10 dBV [0.9 V peak to peak].
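The bracketed voltages follow from the reference levels: 0 dBu is 0.7746 V RMS, 0 dBV is 1 V RMS, and a sine’s peak-to-peak voltage is 2√2 times its RMS value. A minimal Python sketch of that conversion, assuming a sine wave (the helper names are hypothetical):

```python
import math

DBU_REF_VRMS = math.sqrt(0.6)  # 0 dBu = 0.7746 V RMS (1 mW into 600 ohms)
DBV_REF_VRMS = 1.0             # 0 dBV = 1 V RMS

def level_to_vrms(level_db: float, ref_vrms: float) -> float:
    """Convert a dBu or dBV level to RMS volts."""
    return ref_vrms * 10.0 ** (level_db / 20.0)

def sine_vpp(vrms: float) -> float:
    """Peak-to-peak voltage of a sine wave with the given RMS voltage."""
    return 2.0 * math.sqrt(2.0) * vrms

for label, db, ref in [("+4 dBu", 4, DBU_REF_VRMS), ("-10 dBV", -10, DBV_REF_VRMS),
                       ("+8 dBu", 8, DBU_REF_VRMS), ("+10 dBu", 10, DBU_REF_VRMS)]:
    print(f"{label}: {sine_vpp(level_to_vrms(db, ref)):.2f} V p-p")
```

The results (about 3.47, 0.89, 5.50 and 6.93 V p-p) line up with the bracketed figures here and in the spec sheet below, which suggests those p-p values assume a sine.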

The OT can handle hotter inputs and outputs (especially MKii)

ELECTRICAL SPECIFICATIONS

Impedance balanced audio outputs:

Main/cue outputs level: +10 dBu [6.9V peak to peak MKii=15.5V] [ Headphones: +15 dBu ]

Output impedance: 560 Ω unbalanced

S/N ratio: 102 dBFS (20-20.000 Hz)

Unbalanced audio inputs:

Input level: +8 dBu maximum [5.5V peak to peak MKii=15.5V]

Audio input impedance: 9 kΩ

SNR inputs: 99 dBFS (20-20.000 Hz)

maybe we can re-draft below some of the issues with analogue levels and digital levels - each device may not adhere to a given nominal electrical standard (see OT manuals) and also note that the OT outputs are hotter than the inputs when comparing

I’d previously made assumptions about standard levels without really looking at the stats in the manuals, so I think that may account for a few inconsistencies

really excellent facts/contributions to the communal pool, we’re getting there for sure

So here’s a degree of confirmation about the OT’s electrical specs being circa 5.5V peak to peak on the Inputs

I set up the A4 (which should be reasonably calibrated) to output a CV signal of 5.5V peak to peak

Done as follows

CVA

Value Linear

Min -2.75V

Max 2.75V

Set to 64 (the midpoint on the Main page, not the Settings page, so it defaults/centres on 0 V)

Then apply a Sin/Saw LFO at audio rate targeting ValueA with Depth 64 exactly (higher will distort the shape)

Fed that into the OT with Mixer Gain set at 0 (after trying lower voltage min/max extremes first to be safe)

So it’s just about as per the electrical specifications … the signal is nearing FS for the quoted electrical specs +8dBu (5.5V p-p)
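Going the other way, the A4 setting can be checked numerically: a waveform symmetric about 0 V with 5.5 V p-p has a 2.75 V peak. A minimal Python sketch, assuming that symmetry; `vpp_to_dbu` is a hypothetical helper, and the crest factors are the standard values for ideal sine and triangle/sawtooth waves:

```python
import math

DBU_REF_VRMS = math.sqrt(0.6)  # 0 dBu = 0.7746 V RMS

SINE_CREST = math.sqrt(2.0)      # peak / RMS of a sine
TRIANGLE_CREST = math.sqrt(3.0)  # peak / RMS of a symmetric triangle or sawtooth

def vpp_to_dbu(vpp: float, crest_factor: float = SINE_CREST) -> float:
    """Level in dBu of a waveform symmetric about 0 V, from its peak-to-peak voltage."""
    vrms = (vpp / 2.0) / crest_factor
    return 20.0 * math.log10(vrms / DBU_REF_VRMS)

print(f"A4 test, 5.5 V p-p sine: {vpp_to_dbu(5.5):.1f} dBu")
print(f"A4 test, 5.5 V p-p saw:  {vpp_to_dbu(5.5, TRIANGLE_CREST):.1f} dBu")
print(f"MKii input max, 15.5 V p-p sine: {vpp_to_dbu(15.5):.1f} dBu")
```

A 5.5 V p-p sine comes out at about +8.0 dBu, matching the MKI spec, while a saw with the same 5.5 V p-p is only about +6.2 dBu RMS. An ADC clips on instantaneous voltage, so for judging input clipping the ±2.75 V peak is the more direct limit, and the +8 dBu equivalence holds for a sine. The MKii’s 15.5 V p-p works out to roughly +17 dBu for a sine.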

Obviously, just to repeat: there’s no attenuation on this, as discussed above (which dismisses theories in earlier threads), and the inputs were also very red, but there is no distortion.

I can see why the new OT would be a handy tool in the modular world, but at these input levels the MKi OT is definitely not lacking … after the inputs, they both need the same careful gain management that we can document experiences of below

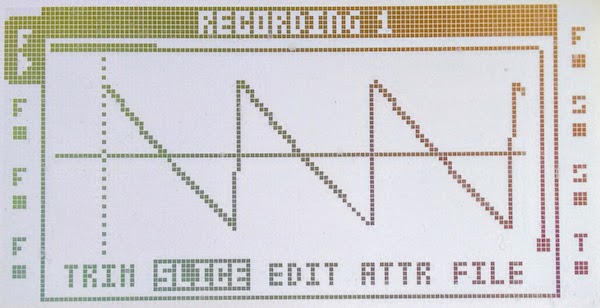

Here’s the screens showing the signals discussed

5.5V(+8dBu) input with Mixer Gain 0 for MKi OT (Metering solid Red) …

Proper

Nice wave, and it seems just fine… (+8 dBu = 8 squares or pixel dots in the vertical line measurement/metering)

not sure what you mean, but to avoid confusion in others the two systems are un-relatable … analog/digital (we can learn empirical relationships for various input devices or maybe master the Leds)

The Full Scale nudging signal on the MKi screen will need to be a hotter one for the MKii, each relates to its own input ADC

I just counted the square pixels on the vertical line, and there are 12 squares in the row… Both waveforms stopped on the 8th… so…

==> maybe not accurate as a level metering though

And of course zooming can ruin the metering I don’t know

Yeah, I was very sloppy with terminology throughout, and that whole post was stream of consciousness, so anything that’s confusing or outright wrong is on me for sure, and I’ll do my best to clarify it if I can. When I refer to the level of the test tone, that’s its level inside the DAW (because I have no reliable way of metering externally, and the whole system isn’t calibrated in any meaningful way).

Yeah, I’m not really sure about that either, to be honest! Looking at it now, I don’t think this part makes any sense: the signal is digitized, the -12 dB pad is applied before it’s saved into the record buffer (I assume; I still don’t really have a good idea of what’s going on with the pad), and then the gain attribute adds those 12 dB back, so for all intents and purposes, in the tests I was doing, the pad didn’t exist. Other than those two photos of the OT audio editor, the pad shouldn’t actually be affecting anything I was testing, so that whole statement about “track level at full = 12 dB below unity” seems completely wrong in retrospect, another mistake I made while writing it up. Because the pad and the gain attribute theoretically cancel each other out, the signal level immediately after the ADC (pre-pad) should be the same as the signal level coming out of the Flex machine (given a +12 dB gain setting in the buffer’s attributes), so unity gain between the OT’s inputs and outputs really was unity gain, and I confused myself while writing it up.

Yep, all of the statements about the test tone level in dBFS are only relative to full scale inside my DAW, because that’s the only accurate point of reference I had. This is all specific to my setup, which is why it would be great to see someone else do the same tests and see what their results are. Without real, calibrated test equipment (and real testing experience, which I don’t have) this is all broad strokes, and it’s definitely worth reiterating often so people don’t take my post for something more definitive than it is!

Thanks, I was blanking on the “u” in “dBu”, so I just didn’t state any units at all and figured it would get sorted out. My biggest question in all of this is what it means that I was clipping the inputs heavily even when I was padding the test signal by 6 or 7 dB in the DAW, using an interface that is supposedly calibrated to +4 dBu. I don’t actually have any of the documentation for my interface, though; maybe its outputs are actually hotter than that and I just don’t realize it. I had the inputs set to +4 dBu, but the outputs are fixed. I’ll track down a manual and see what it says, although since it’s been heavily modded there’s no telling if any of the original specs still apply. My thinking was that doing the loopback test at the beginning amounted to calibrating the output level well enough for the purposes of this stuff, at least. Thinking about it now, maybe I should have been treating the -2 dBFS setting on the Reaper tone generator as 0 dB at the interface output for the purposes of all this, and called the -8 dBFS setting that I had to use to avoid clipping the OT “-6 dB”, but I don’t really know if that makes any more sense.

At any rate, assuming everything was more or less correctly calibrated (which is a big assumption), it seems like the OT inputs were clipping a couple of dB earlier than the specs say they should, but well within the likely margin of error for my actual equipment. I guess if I want to make more sense of this I’d have to drag out my voltmeter (which was once very nice but is also around 30 years old and hasn’t been calibrated since 2011, if the sticker that was on top of it when I got it is accurate, so who knows how accurate it is; calibrating it costs a few hundred dollars, so it’s not going to happen) and take actual voltage measurements at the outputs of my interface for the -2 dBFS and -8 dBFS test tone settings I was using.

In fact, maybe I should actually do that if I have time this evening.

EDIT: your next post, with the tests using the A4, pretty much covered that actually, but I might still take some measurements if only to find out what 0dbfs in my DAW actually corresponds to at the outputs on my interface. But probably not today.

One other thing I observed but didn’t mention was that the test signal doesn’t appear to be distorting appreciably in the OT’s analog signal path - the test tones we’re using appear completely clean right up to where they start clipping the inputs. Obviously, distortion in the analog section could be a lot more program dependent - I’ve had more than one piece of gear that distorts much more readily at some frequencies than others - but it does mean there probably isn’t much to worry about in terms of driving the analog signal path too hard like there is in some gear. It looks like you’ll be clipping the ADC long before you get any significant analog distortion.

EDIT:

My biggest questions right now are:

Where exactly does the -12 dB pad get applied, and how does that correspond to the audio editor display? Is “full scale” at the default zoom actually -12 dBFS in the OT’s internal gain structure? If not, how is it possible that we’re able to approach or reach full scale in the record buffer if there’s a -12 dB pad being applied? It seems to me that there are three possibilities here:

- Full scale inside the record buffer is lower than the level at which the ADC clips, and the audio editor scales the waveform up by default (easily testable by saving a test tone that reaches 0 dBFS in the audio editor display and opening it in a DAW to see if it’s actually reaching 0 dBFS, or if it was padded to -12 dBFS peaks and the editor’s default zoom isn’t showing us what we think it’s showing us)

- The -12 dB pad isn’t applied destructively to the recorded audio and is just a matter of what the gain attribute for a sample means: in this scenario, a gain attribute of +12 would actually be unity and a gain attribute of 0 would be -12 dB, but the audio recorded into a buffer wouldn’t get padded at all

- There’s no padding happening at all, the LEDs are calibrated to go into the red at around -12dB below the point where the ADC clips and it’s all a bit of misdirection to keep people from clipping the inputs.
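The first possibility can also be checked without eyeballing a DAW meter: copy the saved recording to a computer and measure its peak directly. A minimal Python sketch using only the standard-library `wave` module, assuming a 16- or 24-bit integer PCM WAV file (check what format your OT project actually saves); `pcm_peak_dbfs` and `wav_peak_dbfs` are hypothetical helper names:

```python
import math
import wave

def pcm_peak_dbfs(raw: bytes, width: int) -> float:
    """Peak level in dBFS of little-endian signed PCM bytes (16- or 24-bit).

    Full scale is 2**(bits - 1), the magnitude of the most negative code,
    so a file that truly reaches full scale reads about 0 dBFS and one
    padded by 12 dB peaks near -12 dBFS.
    """
    if width not in (2, 3):
        raise ValueError(f"unsupported sample width: {width} bytes")
    full_scale = 2 ** (8 * width - 1)
    peak = 0
    # Channels are interleaved; taking the max over every sample covers all of them.
    for i in range(0, len(raw) - width + 1, width):
        sample = int.from_bytes(raw[i:i + width], "little", signed=True)
        peak = max(peak, abs(sample))
    if peak == 0:
        return float("-inf")  # digital silence
    return 20.0 * math.log10(peak / full_scale)

def wav_peak_dbfs(path: str) -> float:
    """Read a PCM WAV file with the wave module and return its peak in dBFS."""
    with wave.open(path, "rb") as wf:
        width = wf.getsampwidth()
        raw = wf.readframes(wf.getnframes())
    return pcm_peak_dbfs(raw, width)

# Example (hypothetical file name):
# print(wav_peak_dbfs("sine_recording.wav"))
```

A recording that looks full scale in the OT editor but measures near -12 dBFS here would support the first possibility; one that measures near 0 dBFS would point to one of the other two.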

Where are the points in the OT’s internal signal path where the signal can clip? I was able to clip it internally with ease using the AMP level, but how is it structured? Are there points in the path where it processes in floating point and can’t clip, with bottlenecks where it’s converted to fixed point and can clip, or is the entire signal path fixed point? And does that actually make much practical difference? My suspicion here is that the whole thing is fixed point, because if any of it is floating point, why wouldn’t it all be? I’m not sure how we could really test this, though, since the AMP level is the only point where it’s convenient to increase the level cleanly. The next point in the signal path where it could be done is effect 1, but the only effect I can think of that can boost levels much is the distortion in the Lo-Fi effect, and since that also distorts, it might be hard to tell what is the effect and what is unwanted clipping. I guess playing back a sample that peaks at 0 dBFS and then boosting it just a bit with the distortion should (if the whole signal path is fixed point) push it into clipping long before the distortion effect was doing much, so it should be easy to tell the difference, but I won’t be able to actually try that for a few days. For now I’m going to assume that the whole internal signal path for a given track is 24-bit fixed point, that whatever your headroom is coming out of a machine is your headroom for the entire track, and that you have to gain-stage deliberately, like an old DAW from the early 2000s before they all (except Pro Tools, which insists on staying a decade behind its competition and was still fixed point until v11 came out a couple of years ago!) went to a fully floating-point signal path.

My new approach based on this is going to be:

- Record as hot as possible: aim for orange with peaks in the red and as little in the green as possible.

- Keep the AMP level at unity or lower.

- Leave track levels at their default 108 and boost the main output level to +12 for unity gain when sampling from the inputs (I have to test Thru machines and internal sampling to see if they work the same way, though).
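The working assumptions above (every stage fixed point, clipping wherever the running level passes 0 dBFS) can be turned into a quick bookkeeping tool for a planned gain structure. A minimal Python sketch; the stage names and dB values in the example are hypothetical, because the OT’s parameter-to-dB mappings haven’t been measured here, and `trace_levels` is a hypothetical helper:

```python
from typing import List, Tuple

def trace_levels(start_peak_dbfs: float,
                 stages: List[Tuple[str, float, bool]]) -> List[Tuple[str, float, bool]]:
    """Walk a peak level through a chain of gain stages, flagging clips.

    stages: (name, gain_db, is_fixed_point). A fixed-point stage hard-clips
    anything above 0 dBFS, so the level is capped there; a floating-point
    stage passes overs through untouched.
    Returns a list of (name, level_dbfs_after_stage, clipped_here).
    """
    level = start_peak_dbfs
    report = []
    for name, gain_db, fixed in stages:
        level += gain_db
        clipped = fixed and level > 0.0
        if clipped:
            level = 0.0  # the over is lost; later cuts only attenuate a clipped wave
        report.append((name, level, clipped))
    return report

# Hypothetical chain: a -3 dBFS recording, AMP boosted ~6 dB, track level cutting ~6 dB.
chain = [("AMP", 6.0, True), ("track level", -6.0, True), ("master", 0.0, True)]
for name, level, clipped in trace_levels(-3.0, chain):
    print(f"{name:12s} {level:6.1f} dBFS {'CLIPPED' if clipped else ''}")
```

In the example the output ends up a comfortable 6 dB below full scale, yet the signal has already clipped at the AMP stage, which is exactly the trap the "keep AMP at unity or lower" rule avoids.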

I need to read through all this still, great tests by the way, thanks!

One thing I’d like to mention is that an Apogee employee recommended that I use the -10 dBV setting on my interface to send to the OT, when I gave them the specs of the OT MKI’s inputs…

I have an Ensemble Thunderbolt; its manual shows the maximum output level the unit will send out using either the +4 or -10 reference.

At the +4 dBu setting, the unit will output +20 dBu max (when the DAW meters read 0 dBFS)

At the -10 dBV setting, the unit will output +6 dBV max (when the DAW meters read 0 dBFS)

Using this handy dBu/dBV/voltage calculator: http://www.sengpielaudio.com/calculator-db-volt.htm

I can plug in the +6 dBV max from the -10 reference and see that it is 8.2 dBu

The OT MKI’s inputs are rated +8 dBu, so this is just about right…

This means that with my Ensemble Thunderbolt set to -10 dBV, a signal sent out of it should clip the OT’s inputs just before my DAW meter hits 0… It also means the +4 reference setting is way too hot…
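The arithmetic above generalizes: if the interface output scales linearly with the DAW level, the OT input clips at (OT maximum in dBu) minus (interface maximum in dBu) on the DAW meter. A minimal Python sketch using the figures quoted above (Ensemble Thunderbolt maximums as reported from its manual, OT MKI maximum input +8 dBu); the helper names are hypothetical:

```python
import math

def dbv_to_dbu(dbv: float) -> float:
    """Convert dBV (re 1 V RMS) to dBu (re 0.7746 V RMS): about +2.2 dB."""
    return dbv + 20.0 * math.log10(1.0 / math.sqrt(0.6))

def daw_dbfs_at_ot_clip(interface_max_dbu: float, ot_max_dbu: float = 8.0) -> float:
    """DAW meter reading (dBFS) at which the OT's inputs reach their maximum.

    Assumes 0 dBFS in the DAW equals interface_max_dbu at the output jack,
    and that every dB below full scale is a dB lower at the output.
    """
    return ot_max_dbu - interface_max_dbu

minus10_max_dbu = dbv_to_dbu(6.0)  # -10 dBV setting: +6 dBV max
print(f"-10 dBV setting: max {minus10_max_dbu:.1f} dBu, "
      f"OT clips at {daw_dbfs_at_ot_clip(minus10_max_dbu):.1f} dBFS")
print(f"+4 dBu setting: OT clips at {daw_dbfs_at_ot_clip(20.0):.1f} dBFS")
```

With the -10 setting the OT clips at about -0.2 dBFS on the DAW meter, so the full meter range is usable; with the +4 setting it clips at -12 dBFS, leaving the top 12 dB of the DAW’s range unusable for feeding the OT.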